Xu, W. (2019). Toward human-centered AI: A perspective from human-computer interaction. Interactions, 26(4), 42–46. https://doi.org/10.1145/3328485

“Machine learning (ML)-based AI systems trained with incomplete or distorted data (i.e., their ‘worldview’) can lead to biased ‘thinking,’ which may in turn magnify prejudice and inequality, spread rumors and fake news, and even cause physical harm. Because of these concerns, some large AI projects never came to fruition.” (Xu, 2019, p. 42)

“This means that as AI is developed further, attention should be given not only to the technology itself but also to other important nontechnical factors.” (Xu, 2019, p. 42)

“As the core technology of AI, ML and its learning process are opaque, and the output of AI-based decisions is not intuitive.” (Xu, 2019, p. 43)

“Because of the black-box effect, AI solutions are not explainable and comprehensible to users.” (Xu, 2019, p. 43)

“Explainable AI (XAI) enables users to understand the algorithm and parameters used, which is intended to address the AI black-box problem.” (Xu, 2019, p. 44)

“Previous research on XAI had mainly been done in two ways: visualization of ML processes and explainable ML algorithms.” (Xu, 2019, p. 44)
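
One way to picture the “explainable ML algorithms” strand: a model whose output decomposes into per-feature terms lets a user see which inputs drove a given decision. Below is a minimal sketch, assuming a hypothetical linear scoring model; the `LinearModel` class, feature names, and weights are illustrative and are not taken from Xu (2019).

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    """Hypothetical linear scoring model (illustrative only, not from Xu, 2019).

    Its weights are the 'parameters' a user could inspect directly.
    """
    weights: dict[str, float]
    bias: float = 0.0

    def predict(self, x: dict[str, float]) -> float:
        # Score = bias + sum of (weight * feature value)
        return self.bias + sum(w * x[name] for name, w in self.weights.items())

    def explain(self, x: dict[str, float]) -> list[tuple[str, float]]:
        # Each feature's additive contribution to the score, largest magnitude first
        contributions = [(name, w * x[name]) for name, w in self.weights.items()]
        return sorted(contributions, key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical applicant and weights, for illustration only
model = LinearModel(weights={"income": 0.5, "debt": -0.8, "years_employed": 0.2}, bias=1.0)
applicant = {"income": 4.0, "debt": 2.0, "years_employed": 5.0}

print(f"score = {model.predict(applicant):.2f}")
for feature, contribution in model.explain(applicant):
    print(f"{feature:>15}: {contribution:+.2f}")
```

Because the bias plus the listed contributions add up exactly to the score, the explanation is faithful for this model class; explanations of an opaque model can only approximate this.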

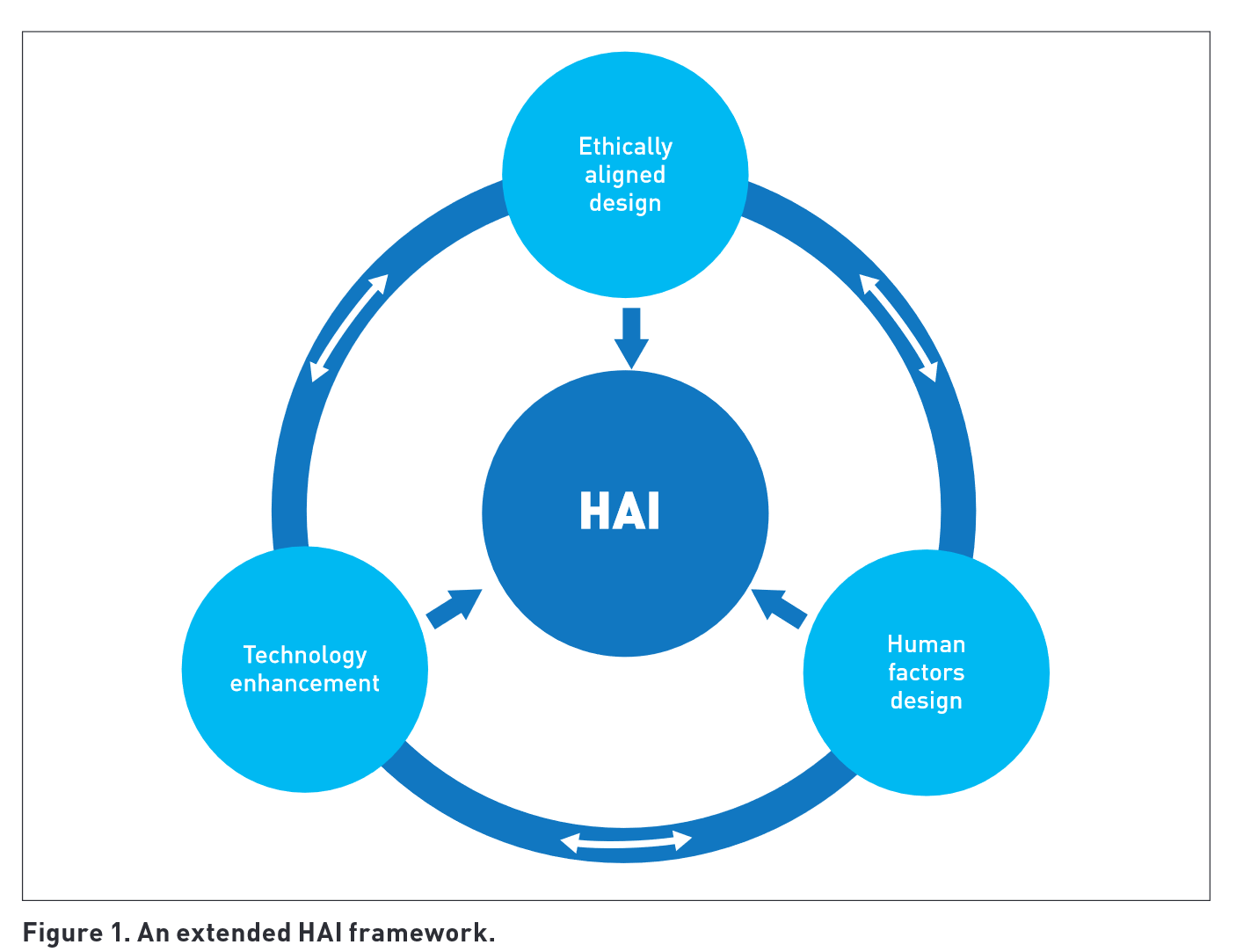

“The framework includes three main components: 1) ethically aligned design, which creates AI solutions that avoid discrimination, maintain fairness and justice, and do not replace humans; 2) technology that fully reflects human intelligence, which further enhances AI technology to reflect the depth characterized by human intelligence (more like human intelligence); and 3) human factors design to ensure that AI solutions are explainable, comprehensible, useful, and usable.” (Xu, 2019, p. 44)

“The ultimate goal of XAI should be to ensure that target users can understand the outputs, thus helping them improve their decisionmaking efficiency.” (Xu, 2019, p. 45)

“Finally, HCI professionals can drive rigorous user-involved behavioral experimental methods to validate proposed research protocols, which was overlooked in most of the previous research driven by AI professionals.” (Xu, 2019, p. 45)

“HCI professionals are good at identifying usage scenarios based on HCI methods such as ethnographic studies and contextual inquiries, and helping mine user needs, behavioral patterns, and usage scenarios. For example, using AI and big data to model real-time user behaviors, and digital user personas to identify potential user needs and real-world usage scenarios.” (Xu, 2019, p. 45)

“The dynamic cooperation between the two cognitive agents with enhanced capability on the machine side (as it learns over time) brings added complexity to the HCI design of AI solutions. There are a series of questions that require systematic HCI research.” (Xu, 2019, p. 45)

“By following a human-centered ML approach as an example, AI and HCI professionals can work together to define UX criteria, test/optimize ML training data and algorithms iteratively, and avoid extreme algorithmic bias.” (Xu, 2019, p. 46)
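
As one concrete form of the “test/optimize ML training data and algorithms iteratively” step, a team could run a simple group-fairness check on each model iteration. Below is a minimal sketch, assuming binary predictions and a single group attribute. The metric is the demographic parity gap (difference in positive-prediction rates between groups), a common fairness measure that Xu (2019) does not name; `THRESHOLD`, the groups, and the predictions are hypothetical.

```python
from collections import defaultdict


def positive_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical tolerance, e.g. agreed between AI and HCI/UX stakeholders
THRESHOLD = 0.2

# Hypothetical model outputs for one training iteration
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_gap(preds, groups)
print(f"gap = {gap:.2f}, within tolerance: {gap <= THRESHOLD}")
```

In an iterative loop, a failing check would send the team back to rebalance training data or adjust the model before the next round. A single parity metric does not capture every form of bias, so it would be one criterion among the UX criteria the quote mentions.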

“This requires a systematic consideration of ethically aligned design, technology that fully reflects human intelligence, and human factors design.” (Xu, 2019, p. 46)

“Finally, current AI-related standards focus primarily on ethical design issues, such as the guidelines published by IEEE. There are currently no specific HCI design standards for guiding AI solutions; the HCI community needs to develop these.” (Xu, 2019, p. 46)